Silicon transistors

During the 1950s, meanwhile, scientists and engineers at Bell Labs and Texas Instruments were developing advanced technologies needed to produce silicon transistors. Because of its higher melting temperature and greater reactivity, silicon was much more difficult to work with than germanium, but it offered major prospects for better performance, especially in switching applications. Germanium transistors make leaky switches; substantial leakage currents can flow when these devices are supposedly in their off state. Silicon transistors have far less leakage. In 1954 Texas Instruments produced the first commercially available silicon junction transistors and quickly dominated this new market—especially for military applications, in which their high cost was of little concern.

In the mid-1950s Bell Labs focused its transistor development efforts around new diffusion technologies, in which very narrow semiconductor layers—with thicknesses measured in microns, or millionths of a metre—are prepared by diffusing impurity atoms into the semiconductor surface from a hot gas. Inside a diffusion furnace the impurity atoms penetrate more readily into the silicon or germanium surface; their penetration depth is controlled by varying the density, temperature, and pressure of the gas as well as the processing time. (See integrated circuit: Fabricating ICs.) For the first time, diodes and transistors produced by these diffusion implantation processes functioned at frequencies above 100 megahertz (100 million cycles per second). These diffused-base transistors could be used in receivers and transmitters for FM radio and television, which operate at such high frequencies.
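The dependence of penetration depth on furnace temperature and processing time can be illustrated with a rough sketch. It assumes a textbook Arrhenius diffusivity and the characteristic depth 2·sqrt(D·t), neither of which is stated in the article, and it ignores the gas density and pressure mentioned above. The parameter values `D0` and `EA` are illustrative placeholders, not measured data for any particular dopant.

```python
import math

BOLTZMANN_EV_PER_K = 8.617e-5  # Boltzmann constant in eV/K


def diffusivity(d0_cm2_s, activation_ev, temp_k):
    """Arrhenius diffusivity D = D0 * exp(-Ea / (k*T)), in cm^2/s."""
    return d0_cm2_s * math.exp(-activation_ev / (BOLTZMANN_EV_PER_K * temp_k))


def diffusion_length_um(d0_cm2_s, activation_ev, temp_k, time_s):
    """Characteristic depth 2*sqrt(D*t), converted from cm to microns."""
    d = diffusivity(d0_cm2_s, activation_ev, temp_k)
    return 2.0 * math.sqrt(d * time_s) * 1e4


# Assumed, illustrative parameters (not taken from the article).
D0, EA = 1.0, 3.5  # cm^2/s and eV

for temp_c in (1000, 1100):
    for minutes in (15, 60):
        depth = diffusion_length_um(D0, EA, temp_c + 273.15, minutes * 60)
        print(f"{temp_c} C, {minutes:>2} min -> {depth:.3f} um")
```

In this simplified model, quadrupling the processing time doubles the depth, while a modest rise in temperature has a much stronger effect because the diffusivity grows exponentially.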

Another important breakthrough occurred at Bell Labs in 1955, when Carl Frosch and Link Derick developed a means of producing a glassy silicon dioxide outer layer on the silicon surface during the diffusion process. This layer offered transistor producers a promising way to protect the silicon underneath from further impurities once the diffusion process was finished and the desired electrical properties had been established.

Texas Instruments, Fairchild Semiconductor Corporation, and other companies took the lead in applying these diffusion technologies to the large-scale manufacture of transistors. At Fairchild, physicist Jean Hoerni developed the planar manufacturing process, whereby the various semiconductor layers and their sensitive interfaces are embedded beneath a protective silicon dioxide outer layer. The company was soon making and selling planar silicon transistors, largely for military applications. Led by Robert Noyce and Gordon E. Moore, Fairchild’s scientists and engineers extended this revolutionary technique to the manufacture of integrated circuits.

In the late 1950s Bell Labs researchers developed ways to use the new diffusion technologies to realize Shockley’s original 1945 idea of a field-effect transistor (FET). To do so, they had to overcome the problem of surface-state electrons, which would otherwise have blocked external electric fields from penetrating into the semiconductor. They succeeded by carefully cleaning the silicon surface and growing a very pure silicon dioxide layer on it. This approach reduced the number of surface-state electrons at the interface between the silicon and oxide layers, permitting fabrication of the first successful field-effect transistor in 1960 at Bell Labs—which, however, did not pursue its development any further.

Refinements of the FET design by other companies, especially RCA and Fairchild, resulted in the metal-oxide-semiconductor field-effect transistor (MOSFET) during the early 1960s. The key problems to be solved were the stability and reliability of these MOS transistors, which relied upon interactions occurring at or near the sensitive silicon surface rather than deep inside. The two firms began to make MOS transistors commercially available in late 1964.

In early 1963 Frank Wanlass at Fairchild developed the complementary MOS (CMOS) transistor circuit, based on a pair of MOS transistors. This approach eventually proved ideal for use in integrated circuits because of its simplicity of production and very low power dissipation during standby operation. Stability problems continued to plague MOS transistors, however, until researchers at Fairchild developed solutions in the mid-1960s. By the end of the decade, MOS transistors were beginning to displace bipolar junction transistors in microchip manufacturing. Since the late 1980s CMOS has been the technology of choice for digital applications, while bipolar transistors are now used primarily for analog and microwave devices.

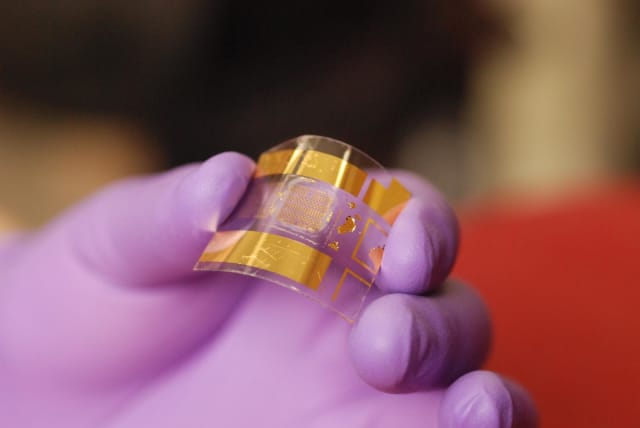

CMOS: A complementary metal-oxide semiconductor (CMOS) consists of a pair of semiconductors connected to a common secondary voltage such that they operate in opposite (complementary) fashion. Thus, when one transistor is turned on, the other is turned off, and vice versa. Image courtesy Encyclopædia Britannica, Inc.

Transistor Principles

The p-n junction

The operation of junction transistors, as well as most other semiconductor devices, depends heavily on the behaviour of electrons and holes at the interface between two dissimilar layers, known as a p-n junction. Discovered in 1940 by Bell Labs electrochemist Russell Ohl, p-n junctions are formed by adding two different impurity elements to adjacent regions of germanium or silicon. The addition of these impurity elements is called doping. Atoms of elements from Group 15 of the periodic table (which possess five valence electrons), such as phosphorus or arsenic, contribute an electron that has no natural resting place within the crystal lattice. These excess electrons are therefore loosely bound and relatively free to roam about, acting as charge carriers that can conduct electrical current. Atoms of elements from Group 13 (which have three valence electrons), such as boron or aluminum, induce a deficit of electrons when added as impurities, effectively creating “holes” in the lattice. These positively charged quantum mechanical entities are also fairly free to roam around and conduct electricity. Under the influence of an electric field, the electrons and holes move in opposite directions. During and immediately after World War II, chemists and metallurgists at Bell Labs perfected techniques of adding impurities to high-purity silicon and germanium to induce the desired electron-rich layer (known as the n-layer) and the electron-poor layer (known as the p-layer) in these semiconductors, as described in the section Development of transistors.

Electron hole movement: Movement of an electron hole in a crystal lattice. Image courtesy Encyclopædia Britannica, Inc.

A p-n junction acts as a rectifier, similar to the old point-contact crystal rectifiers, permitting easy flow of current in only a single direction. If no voltage is applied across the junction, electrons and holes will gather on opposite sides of the interface to form a depletion layer that will act as an insulator between the two sides. A negative voltage applied to the n-layer will drive the excess electrons within it toward the interface, where they will combine with the positively charged holes attracted there by the electric field. Current will then flow easily. If instead a positive voltage is applied to the n-layer, the resulting electric field will draw electrons away from the interface, so combinations of them with holes will occur much less often. In this case current will not flow (other than tiny leakage currents). Thus, electricity will flow in only one direction through a p-n junction.
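The one-way behaviour described above is commonly approximated by the ideal-diode (Shockley) equation, I = I_s·(exp(V/(n·V_T)) − 1). The sketch below is a minimal illustration under assumed parameters: the saturation current, ideality factor, and room-temperature thermal voltage are illustrative defaults, not values from the article. Here a positive `voltage` means the p-layer is positive relative to the n-layer, which corresponds to the negative voltage on the n-layer in the text.

```python
import math


def diode_current(voltage, saturation_current=1e-12, ideality=1.0,
                  thermal_voltage=0.02585):
    """Ideal-diode current in amperes for a bias in volts.

    Positive voltage = forward bias (p-layer positive relative to n-layer).
    All default parameter values are assumptions for illustration.
    """
    exponent = voltage / (ideality * thermal_voltage)
    return saturation_current * (math.exp(exponent) - 1.0)


for v in (-0.6, -0.2, 0.0, 0.2, 0.6):
    print(f"{v:+.1f} V -> {diode_current(v):.3e} A")
```

Under reverse bias the current saturates at roughly the tiny leakage value `saturation_current`, while under forward bias it grows exponentially, which is the rectifying action the paragraph describes.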

Junction transistors

Shortly after his colleagues John Bardeen and Walter H. Brattain invented their point-contact device, Bell Labs physicist William B. Shockley recognized that these rectifying characteristics might also be used in making a junction transistor. In a 1949 paper Shockley explained the physical principles behind the operation of these junctions and showed how to use them in a three-layer—n-p-n or p-n-p—device that could act as a solid-state amplifier or switch. Electric current would flow from one end to the other, with the voltage applied to the inner layer governing how much current rushed by at any given moment. In the n-p-n junction transistor, for example, electrons would flow from one n-layer through the inner p-layer to the other n-layer. Thus, a weak electrical signal applied to the inner, base layer would modulate the current flowing through the entire device. For this current to flow, some of the electrons would have to survive briefly in the presence of holes; in order to reach the second n-layer, they could not all combine with holes in the p-layer. Such bipolar operation was not at all obvious when Shockley first conceived his junction transistor. Experiments with increasingly pure crystals of silicon and germanium showed that it indeed occurred, making bipolar junction transistors possible.
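The link between electron survival in the base and amplification can be sketched with a simplified textbook relation that the article does not give explicitly: if a fraction alpha of the electrons entering the base reaches the second n-layer, the ratio of output current to base current is roughly beta = alpha / (1 − alpha). The function names and sample values below are hypothetical, and the model lumps all loss mechanisms into the single survival fraction.

```python
def current_gain(survival_fraction):
    """Approximate gain beta = alpha / (1 - alpha).

    survival_fraction (alpha) is the assumed fraction of electrons that
    cross the base without combining with holes.
    """
    if not 0.0 <= survival_fraction < 1.0:
        raise ValueError("survival_fraction must be in [0, 1)")
    return survival_fraction / (1.0 - survival_fraction)


def collector_current(base_current, survival_fraction):
    """Output current controlled by a small base current (same units)."""
    return current_gain(survival_fraction) * base_current


for alpha in (0.5, 0.9, 0.99):
    print(f"alpha = {alpha:.2f} -> gain of about {current_gain(alpha):.1f}")
```

The sketch shows why crystal purity mattered: raising the survival fraction from 0.9 to 0.99 increases the gain roughly tenfold in this model.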

Cross section of an n-p-n transistor: An n-p-n transistor and its electronic symbol. Image courtesy Encyclopædia Britannica, Inc.

MOS-type transistors

In metal-oxide-semiconductor (MOS) transistors, it is the distance between source and drain that largely determines the operating frequency. In an n-channel MOS (NMOS) transistor, for example, the source and the drain are two n-type regions that have been established in a piece of p-type semiconductor, usually silicon. Except for the two points at which metal leads contact these regions, the entire semiconductor surface is covered by an insulating oxide layer. The metal gate, usually aluminum, is deposited atop the oxide layer just above the gap between source and drain. If there is no voltage (or a negative voltage) upon the gate, the semiconductor material beneath it will contain excess holes, and very few electrons will be able to cross the gap, because one of the two p-n junctions will block their path. Therefore, no current will flow in this configuration, other than unavoidable leakage currents. If the gate voltage is instead positive, an electric field will penetrate through the oxide layer and attract electrons into the thin silicon layer directly beneath the gate (often called the inversion layer). Once this voltage exceeds a specific threshold value, electrons will begin flowing easily between source and drain, and the transistor turns on.
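The threshold behaviour of an NMOS transistor can be illustrated with the long-channel square-law model found in introductory textbooks. This is a sketch under stated assumptions rather than anything given in the article: the threshold voltage `v_th` and transconductance parameter `k` are illustrative defaults, and leakage currents are ignored.

```python
def nmos_drain_current(v_gs, v_ds, v_th=0.7, k=1e-3):
    """Square-law drain current in amperes for an idealized NMOS device.

    v_gs: gate-to-source voltage, v_ds: drain-to-source voltage (volts).
    v_th (volts) and k (A/V^2) are assumed, illustrative parameters.
    """
    overdrive = v_gs - v_th
    if overdrive <= 0 or v_ds <= 0:
        return 0.0  # below threshold: transistor off (leakage ignored)
    if v_ds < overdrive:
        # Channel fully formed from source to drain.
        return k * (overdrive * v_ds - 0.5 * v_ds ** 2)
    # Current saturates once v_ds exceeds the overdrive voltage.
    return 0.5 * k * overdrive ** 2


for gate in (-1.0, 0.0, 0.5, 1.0, 2.0, 3.0):
    print(f"V_gs = {gate:+.1f} V -> I_d = {nmos_drain_current(gate, 5.0):.2e} A")
```

With zero or negative gate voltage the model returns no current, and current rises quickly once the gate voltage passes the threshold.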

Analogous behaviour occurs in a p-channel MOS transistor, in which the source and the drain are p-type regions formed in n-type semiconductor material. Here a negative gate voltage whose magnitude exceeds a threshold induces a layer of holes (instead of electrons) beneath the gate and permits a current of them to flow from source to drain. For both n-channel and p-channel MOS (also called NMOS and PMOS) transistors, the operating frequency is largely governed by the speed at which the electrons or holes can drift through the semiconductor material divided by the distance from source to drain. Because electrons have mobilities through silicon that are about three times higher than those of holes, NMOS transistors can operate at substantially higher frequencies than PMOS transistors. Small separations between source and drain also promote high-frequency operation, and extensive efforts have been devoted to reducing this distance.
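The scaling argument above (frequency roughly equal to drift speed divided by source-to-drain distance) can be turned into a back-of-the-envelope estimate. The sketch assumes that drift speed equals mobility times a uniform field V/L and ignores velocity saturation and parasitic effects. The mobility values are illustrative assumptions chosen only to reflect the factor of about three mentioned in the text.

```python
def transit_frequency_hz(mobility_cm2_per_vs, channel_length_um, drain_voltage):
    """Rough upper-bound frequency = drift velocity / channel length.

    Assumes a uniform field V/L along the channel; real devices deviate
    from this, notably through velocity saturation.
    """
    length_cm = channel_length_um * 1e-4
    field_v_per_cm = drain_voltage / length_cm
    drift_velocity_cm_s = mobility_cm2_per_vs * field_v_per_cm
    return drift_velocity_cm_s / length_cm


# Assumed, illustrative mobilities (cm^2 per volt-second).
ELECTRON_MOBILITY = 450.0
HOLE_MOBILITY = 150.0

for length_um in (10.0, 5.0):
    f_n = transit_frequency_hz(ELECTRON_MOBILITY, length_um, 5.0)
    f_p = transit_frequency_hz(HOLE_MOBILITY, length_um, 5.0)
    print(f"L = {length_um:4.1f} um: NMOS {f_n:.2e} Hz, PMOS {f_p:.2e} Hz")
```

In this simple model the frequency scales with the inverse square of the channel length, so halving the source-to-drain distance quadruples the estimate, which suggests why shrinking this distance received so much effort.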

In the 1960s Frank Wanlass of Fairchild Semiconductor recognized that combinations of an NMOS and a PMOS transistor would draw extremely little current in standby operation: just the tiny, unavoidable leakage currents. These CMOS, or complementary metal-oxide-semiconductor, transistor circuits consume significant power only when the gate voltage exceeds some threshold and a current flows from source to drain. Thus, they can serve as very low-power devices, often consuming a million times less power than the equivalent bipolar junction transistors. Together with their inherent simplicity of fabrication, this feature of CMOS transistors has made them the natural choice for manufacturing microchips, which today cram millions of transistors into a surface area smaller than a fingernail. In such cases the waste heat generated by the component’s power consumption must be kept to an absolute minimum, or the chips will simply melt.
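The complementary action of the NMOS and PMOS pair can be sketched as a simple logic-level model of a CMOS inverter. This is an idealized illustration, not a circuit simulation: the supply voltage and the two threshold voltages are assumed values, and each transistor is treated as a perfect switch.

```python
def cmos_inverter(v_in, v_dd=5.0, v_th_n=1.0, v_th_p=-1.0):
    """Return (nmos_on, pmos_on, output) for an idealized CMOS inverter.

    The NMOS source is tied to ground and the PMOS source to v_dd, so the
    gate-to-source voltages are v_in and v_in - v_dd respectively.
    Threshold and supply values are assumptions for illustration.
    """
    nmos_on = v_in > v_th_n
    pmos_on = (v_in - v_dd) < v_th_p
    if pmos_on and not nmos_on:
        output = "high"        # output pulled up to v_dd, no static current
    elif nmos_on and not pmos_on:
        output = "low"         # output pulled down to ground, no static current
    elif nmos_on and pmos_on:
        output = "transition"  # both conduct briefly; current flows
    else:
        output = "floating"    # neither conducts (not reached with defaults)
    return nmos_on, pmos_on, output


for v in (0.0, 2.5, 5.0):
    print(f"V_in = {v:.1f} V -> {cmos_inverter(v)}")
```

In either stable state one transistor of the pair is off, so no direct path exists from the supply to ground. Both conduct only during the brief transition, which is why standby power is so low in this picture.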

Article Courtesy Encyclopædia Britannica, Inc.